Subsections of Testing

Test Strategy

Scope

This document covers the testing and approval steps of the development activities including new features and bug-fixes.

Purpose

- To determine if the software meets the requirements

- To explain the software testing and acceptance processes, methods, and responsibilities

Software testing is an activity to investigate software under test in order to provide quality-related information to stakeholders. QA (quality assurance) is the implementation of policies and procedures intended to prevent defects from reaching customers. The test team applies testing and QA activities.

Responsibility & Authority

Client or Business Unit

- Makes demands by opening issues when required.

- Reviews and approves the applications and processes developed.

Development Team & Project Manager

Carries out application and process development for issues opened by business units or the client.

Test Team

- Manages the test process and ensures that test activities are carried out in the company.

- Follows new technologies and methods and evaluates their applicability.

- Evaluates which manual tests can be automated and maintains the automated tests.

Test Approach

The QA process includes the following test activities:

- Story/Requirement Review and Analysis: User stories or requirements defined by the customer or product owner should be tested (static test) by the test team. Logical mistakes and gaps should be reported.

  - Test Object: Requirements or stories

  - Test Deliverables: Analysis report

- Unit Testing: Usually performed by the development team unless there is a specific need for the test team to do it. The purpose is to validate that each unit of the software code performs as expected.

  - Test Object: The code

  - Test Deliverables: Defects

- Exploratory Testing: A testing activity where test cases are not created in advance but testers check the system on the fly.

  - Test Object: The application

  - Test Deliverables: Defects, Test Report

- Feature Validation Tests: Testing the implementation of a user story, requirement, etc.

  - Test Object: Part of the application

  - Test Deliverables: Defects

- Smoke Testing: Preliminary testing to reveal simple failures severe enough to reject a prospective software release.

  - Test Object: The application

  - Test Deliverables: Defects, Test Report

- Performance, Load, Stress, Accessibility Tests: Non-functional tests

  - Test Object: The application

  - Test Deliverables: Defects, Test Report

- Integration Testing, System Testing, E2E Testing: Functional tests

  - Test Object: The application or a part of the application

  - Test Deliverables: Defects, Test Report

- Change-Based Testing: Regression testing is the process of testing a system (or part of a system) that has already been tested, using a set of predefined test scripts, in order to discover new errors introduced by recent changes. The effects of software errors or regressions (such as lower performance), functional and non-functional improvements, patches made to some areas of the system, and configuration changes can be monitored with regression testing. A retest is also performed after every bug fix.

  - Test Object: The application

  - Test Deliverables: Defects, Test Report

- User Acceptance Testing (UAT): UAT should be performed by a randomly selected tester at the end of every project to increase quality if the customer or the product owner will not or cannot run it. These tests should focus on UX, and the tester should take the end user's point of view. UAT should be very brief because its main aim is not to find all bugs. Ideally, UAT should be performed by the customer.

  - Test Object: The application

  - Test Deliverables: Suggestions, defects, test report

Other activities:

- Bug Triage: It is a process where each bug is prioritized based on its severity, frequency, risk, etc.

- Bug Root-Cause Analysis: Identifying the underlying causes of defects.
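As a minimal sketch of the bug triage activity above, the snippet below ranks bugs by a combined score of severity, frequency, and risk. The 1 to 5 scales, the equal weighting, the bug keys, and the `Bug`, `triage_score`, and `triage` names are illustrative assumptions, not part of the documented process; each project would agree on its own scheme.

```python
from dataclasses import dataclass


@dataclass
class Bug:
    key: str
    severity: int   # 1 (cosmetic) .. 5 (blocker); assumed scale
    frequency: int  # 1 (rare) .. 5 (always); assumed scale
    risk: int       # 1 (low) .. 5 (high); assumed scale


def triage_score(bug: Bug) -> int:
    """Combine the three factors; equal weighting is an assumption."""
    return bug.severity + bug.frequency + bug.risk


def triage(bugs: list[Bug]) -> list[Bug]:
    """Return bugs ordered from highest to lowest priority."""
    return sorted(bugs, key=triage_score, reverse=True)


# Hypothetical bug records used only for illustration.
bugs = [
    Bug("BUG-1", severity=2, frequency=5, risk=1),
    Bug("BUG-2", severity=5, frequency=3, risk=4),
    Bug("BUG-3", severity=3, frequency=1, risk=2),
]
ordered_keys = [bug.key for bug in triage(bugs)]
```

A weighted sum or a severity-first sort would work equally well; the point is only that the ordering is derived from agreed factors rather than decided ad hoc.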

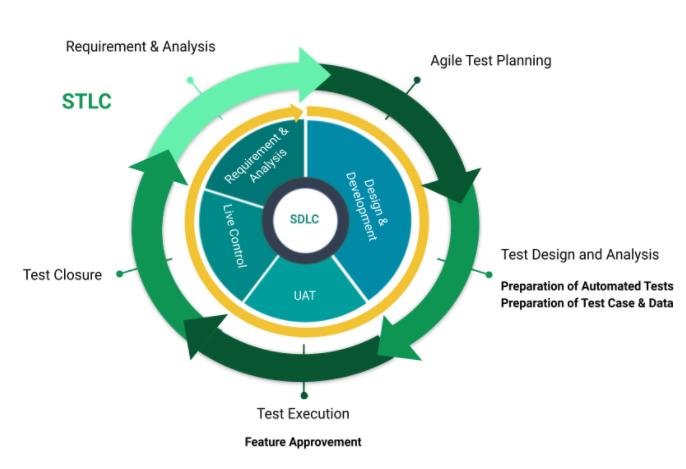

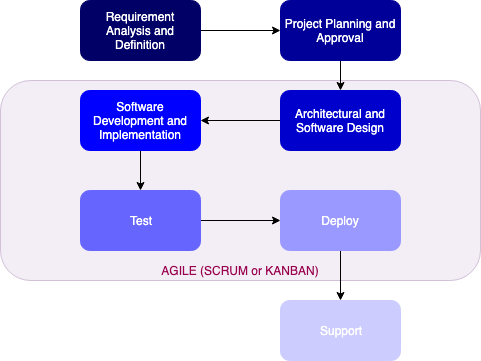

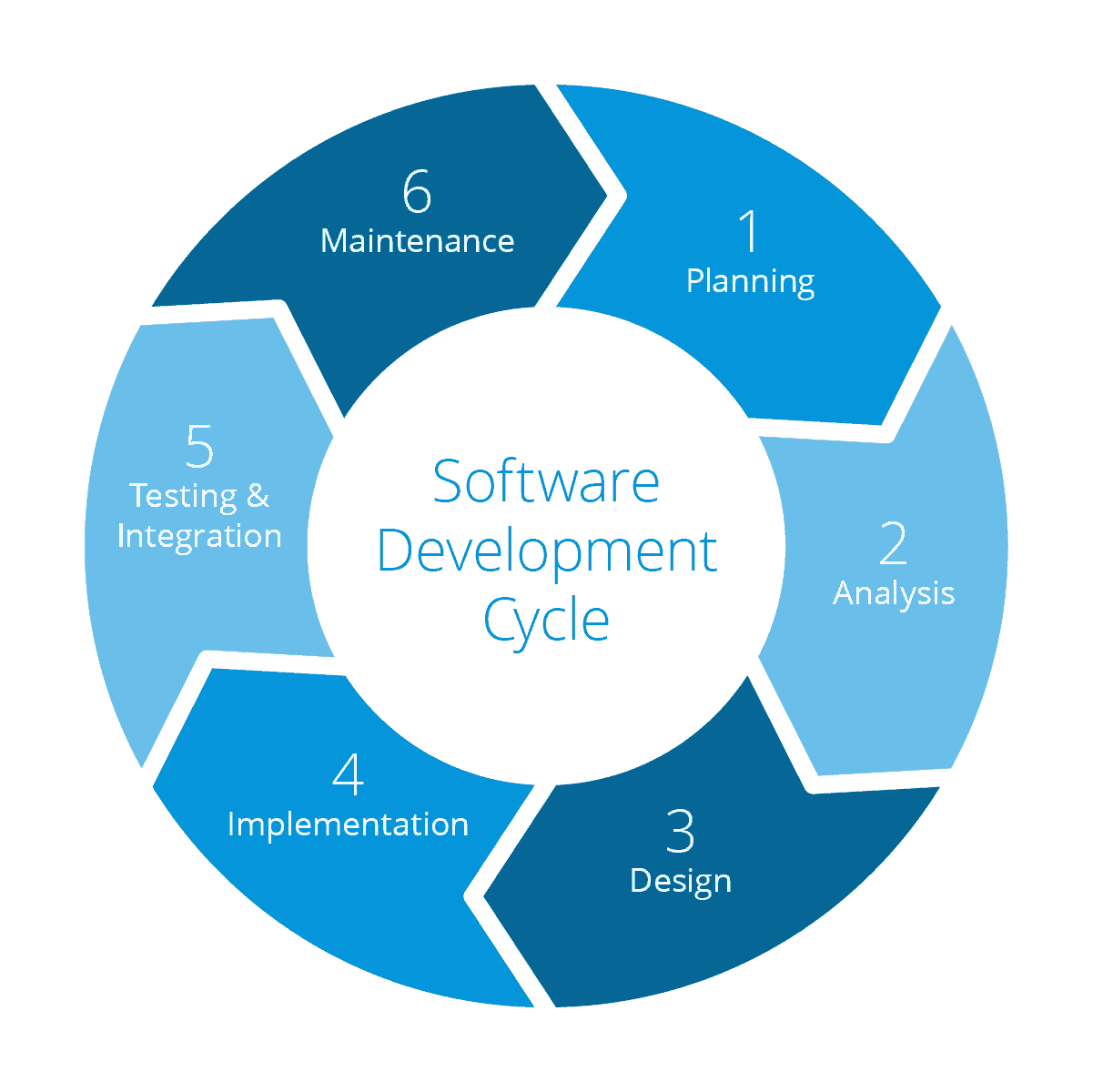

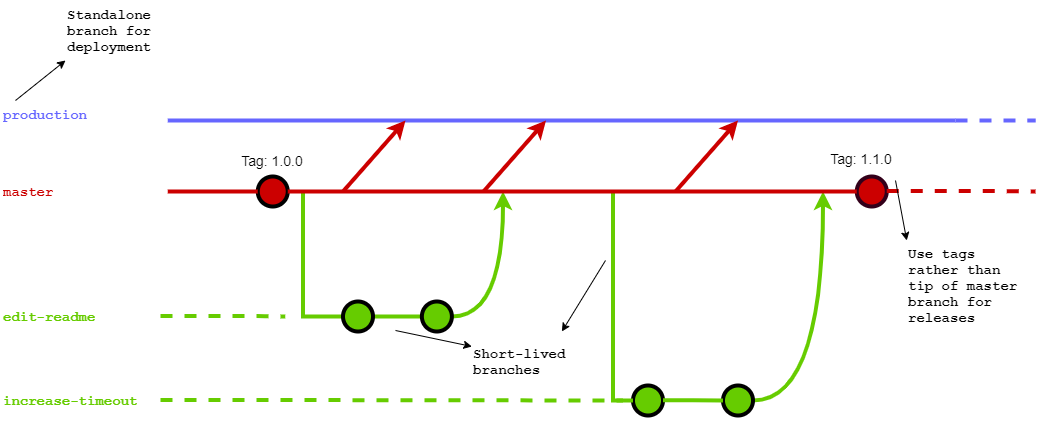

This process is called the Software Test Life Cycle (STLC) in international standards. At BISS, it is carried out in parallel with the Software Development Life Cycle (SDLC) process.

SDLC & STLC at BISS

The STLC process starts with the submission of the test request and the inclusion of the test team in the project as part of the system analysis studies. This process is continued and completed with Requirements Review, Design & Development, Feature Approvement, and Live Control.

Well-written requirements form the foundation of a successful project. The requirements review is an opportunity to check the requirements document for errors and omissions, and confirm that the overall quality of the requirements is acceptable before the project proceeds. It is also one final opportunity to communicate and confirm project requirements with all stakeholders. The test team carefully reviews all requirements.

Meanwhile, test plan preparation shall start once the requirements are clarified. Based on the requirements, the technologies to be used, the test approach, the test strategy, and the test types to be performed at each test level should be documented. Test entry and exit criteria should also be defined. The test plan shall not contain technical details that could change during the project. It shall be approved by the product owner and read by all stakeholders.

In the design and development phase, test scenarios are prepared and the prepared scenarios are reviewed by stakeholders if possible. At the same time, test data is prepared in order to run test scenarios.

Test environment preparation starts after the test data is prepared. In this step, the environment in which the prepared test scenarios can be run is set up, and the necessary tools are installed and configured. If test scenarios are automated, the automated test scripts shall be prepared in this step.

After test data and the test environment are prepared, functional testing and non-functional testing activities shall be performed.

Automated tests consist of scenarios prepared during the creation of automated scripts and automated regression scenarios. Manual tests are based on exercising the prepared scenarios as an end user would. Both are applied in order to provide optimum efficiency in the STLC process.

The smoke test is also carried out by the test team. It checks the main functions of a product after deployment to any environment (test, production, etc.).

UAT should be performed by a test team member other than the project’s testers if it is not performed by the customer or the product owner.

All other non-functional tests like performance, load, stress or accessibility testing could be performed if needed in the project.

Error records are created in the Project Management Tool for detected defects. Resolved defects are retested once the entry criteria defined in the test plan are satisfied.

The test report containing the latest status of the project is prepared by the test team, manually or automatically, and shared periodically with the PO, the development team, or all stakeholders. Its content and level of detail should be decided by the development team.

Entry & Exit Criteria

Entry Criteria

Entry criteria are the required conditions and standards for work product quality that must be present or met prior to the start of a test phase.

Entry criteria include the following:

- Review of the completed test script(s) for the prior test phase.

- No open critical defects remaining from the prior test phase.

- Correct versioning of components moved into the appropriate test environment.

- Complete understanding of the application flow.

- The test environment is configured and ready.

Exit Criteria

Exit criteria are the required conditions and standards for work product quality that block the promotion of incomplete or defective work products to the next test phase of the component.

Exit criteria include the following:

- Successful execution of the critical test scripts for the current test phase.

- Test cases have been written and reviewed.

- No open critical defects.

- Component stability in the appropriate test environment.

- Product Owner accepts the User Story.

- Functional Tests passed.

- Acceptance criteria met.
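The criteria above behave like a gate: a phase is entered or exited only when every item holds. A minimal sketch of that check follows, assuming each criterion has already been evaluated to a boolean; the `unmet_criteria` and `may_exit_phase` names and the sample values are hypothetical.

```python
def unmet_criteria(criteria: dict[str, bool]) -> list[str]:
    """Return the names of criteria that are not yet satisfied."""
    return [name for name, met in criteria.items() if not met]


def may_exit_phase(criteria: dict[str, bool]) -> bool:
    """A phase may be exited only when every exit criterion is met."""
    return not unmet_criteria(criteria)


# Hypothetical status of a few exit criteria for one test phase.
exit_criteria = {
    "critical test scripts executed successfully": True,
    "test cases written and reviewed": True,
    "no open critical defects": False,
    "acceptance criteria met": True,
}
blocking = unmet_criteria(exit_criteria)
```

Listing the unmet criteria, rather than returning only a yes or no answer, makes it easier to report why a work product was not promoted.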

Test Glossary

Abbreviations

| Abbreviation | Expansion |
| --- | --- |
| STLC | Software Test Life Cycle |
| SDLC | Software Development Life Cycle |
| ISTQB | International Software Testing Qualifications Board |
| IEEE | Institute of Electrical and Electronics Engineers |

Terms

| Term | Explanation |
| --- | --- |
| Requirements traceability matrix | A document that demonstrates the relation between requirements and other artifacts. |
| Static test | Tests that are run without executing the code. They consist of manual and automated reviews. |
| Dynamic test | Tests that are run while the application is running. |
| Functional test | Tests that check whether the application is working right. |
| Non-functional test | Tests that check whether the application meets non-functional requirements such as performance and usability. |
| Change-based testing | Testing to verify the latest changes and/or their effects on the whole system. |
| White-box testing | Tests performed with consideration of the internal structure of the software. |
| Black-box testing | Tests performed with consideration of the specifications of the software. |
| Grey-box testing | Tests performed with consideration of both the specifications and the internal structure of the software. |
| Regression test | Testing an application that has been tested before to be sure that recent changes have not broken anything covered by the regression test suite. |
| Re-test (Confirmation Test) | Testing a part of the application that has been tested before and now contains an untested change, such as a bug fix. |
| Test data | Data created during or before test design activities. It can be used by the test or the application during test execution or implementation. |
| Test design | A process in which test objectives are converted into test deliverables such as plans, scenarios, and data. |
| Test execution | The process of running tests and comparing actual and expected results. |
| Test implementation | A process in which test scenarios are detailed (with steps and test data) and/or automated. |
| Work product | Any output in the SDLC (analysis document, code, test scenario, etc.). |
| Author | Work product creator. |
| Bug/Defect | A mismatch between the requirement and the software that might cause a failure in production. |
| Requirement | Definition of what the customer needs. |
| User Story | A requirement written in everyday or business language to explain what the user really needs, including non-functional requirements. It also has acceptance criteria. |
| Acceptance Criteria | The criteria that software must satisfy to be accepted by the user. |
| Test Script | An automated or manual script used to execute a test. |
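To make the "requirements traceability matrix" entry more concrete, here is a minimal sketch that stores the matrix as a mapping from requirements to the test cases covering them. The `REQ-*` and `TC-*` identifiers and both function names are hypothetical; a real matrix usually lives in a project management or test management tool and may trace to other artifacts as well.

```python
# Hypothetical requirement IDs mapped to the test cases that cover them.
traceability = {
    "REQ-1": ["TC-1", "TC-2"],
    "REQ-2": ["TC-3"],
    "REQ-3": [],
}


def uncovered_requirements(matrix: dict[str, list[str]]) -> list[str]:
    """Return requirements that no test case traces back to."""
    return [req for req, tests in matrix.items() if not tests]


def requirements_for_test(matrix: dict[str, list[str]], test_id: str) -> list[str]:
    """Reverse lookup: which requirements does a given test case cover?"""
    return [req for req, tests in matrix.items() if test_id in tests]


gaps = uncovered_requirements(traceability)
covered_by_tc3 = requirements_for_test(traceability, "TC-3")
```

The forward lookup exposes coverage gaps, while the reverse lookup shows which requirements are affected when a test fails.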

Test Policy

Mission of Testing

Bosphorus Industrial Software Solutions (BISS) strongly believes that testing saves cost and time while bringing efficiency and quality to all software products. The main idea is to deliver a product of such quality that users have the intended experience.

Definition of Testing

BISS defines Testing as validation and quality improvement of the product, from all angles, from the ideation phase to the final delivery. Testing is an ongoing process that runs alongside the other processes in the SDLC.

Testing Approach

The testing approach is determined according to the project requirements, customer expectations, team knowledge, and project duration. More than one approach might be combined to perform tests in the project.

Test Process

Our process follows the IEEE and ISTQB standards.

This process applies as a general rule for all the projects at BISS. To see the equivalent steps in the STLC or SDLC of each activity please refer to the Test Strategy Document.

1. Requirement Testing

- Testing requirements, user stories, or analysis documents to see if they are ready for the development phase.

- Identifying types of testing to be performed. Please check Table 1 for further information.

- Preparation of the “requirements traceability matrix” (if possible).

2. Test Planning

- Preparation of test plan and other test documentation.

- Definition of entry and exit criteria.

- Selection of test tools.

- Estimation of the effort (include training time if needed).

- Determination of roles and responsibilities.

3. Test Case & Test Data Development

- Creating test cases (automated and/or manual test cases).

- Creating or requesting test data.

4. Test Environment Setup

This process can be started along with phase 3.

- Setting up the test environment, users, and data.

5. Test Execution

- Executing tests.

- Reporting bugs and errors.

- Analysing the root cause if possible.

6. Test Closure

Preparation of the test closure document, which includes:

- Time spent.

- Bug and/or error percentage.

- Percentage of tests passed.

- The total number of tests.
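The figures in the test closure document can be derived from raw pass/fail results. The sketch below is one possible way to compute them; it assumes that "bug and/or error percentage" means the share of executed tests that failed, which is an interpretation rather than something the process defines, and the `ClosureSummary` and `summarize` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ClosureSummary:
    total_tests: int
    passed_pct: float
    failed_pct: float   # assumed meaning of "bug and/or error percentage"
    hours_spent: float


def summarize(results: list[bool], hours_spent: float) -> ClosureSummary:
    """Build closure figures from per-test pass/fail results."""
    total = len(results)
    passed = sum(results)
    # Guard against an empty run to avoid dividing by zero.
    passed_pct = 100.0 * passed / total if total else 0.0
    failed_pct = 100.0 * (total - passed) / total if total else 0.0
    return ClosureSummary(total, passed_pct, failed_pct, hours_spent)


# Hypothetical run: four tests, one failure, 12.5 hours of effort.
summary = summarize([True, True, True, False], hours_spent=12.5)
```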

In each step, there are entry and exit criteria that need to be met. These criteria usually vary from project to project but the common entry and exit criteria can be found in the Test Strategy Document.

7. Test Levels and Test Types

| Test Level | Owner | Test Types | Objective(s) |
| --- | --- | --- | --- |
| Unit (Component) | Developer, Software Tester | Functional testing; White-box testing; Change-related testing (Re-testing, Regression testing) | Detect defective code in units; reduce the risk of unit failure in production |
| Integration | Developer, Software Tester | Functional testing; White-box testing; Grey-box testing; Change-related testing (Re-testing, Regression testing) | Detect defects in unit interfaces; reduce the risk of dataflow and workflow failures in production |
| System | Software Tester | Functional testing; White-box testing; Grey-box testing; Black-box testing; Change-related testing (Re-testing, Regression testing); Non-functional testing (Performance, Load, Stress, Accessibility) | Detect defects in use cases; reduce the risk of unexpected system behavior in a particular environment |
| Acceptance | Software Tester, Product Owner, Customer | Functional testing; Black-box testing; Alpha testing; Non-functional testing (Performance, Load, Stress, Accessibility) | Make sure the software is able to do the required tasks in the real world |

Scrum

To achieve agility in our projects, we use the Scrum framework. The traits we value most in Scrum are that it is lightweight and simple to understand. Scrum is hard to master; however, since we adopted it from the day we started the company, we are highly motivated to use it to our benefit. Please take some time to read the Scrum Guide for more detailed information on Scrum.

Scrum Values

- Courage: People are encouraged to speak their minds both on the projects they participate in and at town hall meetings. We focus on empowering individuals to make their own decisions so that they can be more successful in delivering a high-quality product.

- Focus: Everyone within the company focuses on meeting the goals of the Sprint and the Scrum team. This means both delivering high value and meeting the standards of the team and the company.

- Commitment: People within the company are committed to achieving both company and Scrum team goals.

- Respect: People are independent, and this is respected by everyone within the team.

- Openness: The Scrum team agrees to be open with each other in order to create an environment for self-development as well as for a better product.

Scrum Events and Artifacts

- Sprint: Every project team works in time-boxed sprints. The timebox might differ from team to team; however, every sprint must be time-boxed.

- Sprint Planning: Each team has its own planning meetings with Product Owners, Scrum Masters and Development Teams. This is the meeting where the team agrees on what will be achieved in the next sprint.

- Daily Scrum: Each team has a scheduled meeting every day to update team members on what was done the previous day and what the plan is for the current day. Attendance at the Daily Scrum is crucial, and the punishment for missing the meeting more than twice within a month is decided by the team. (Baklava is highly encouraged.)

- Sprint Review: Held at the end of every sprint to show the increment to the PO as well as the stakeholders. Each team is required to hold this meeting.

- Sprint Retrospective: This meeting is regarded as low priority by most people using Scrum, yet it is one of the events we most want our teams to hold. It helps teams to grow and understand their weaknesses as well as their strengths.

- Backlog Refinement (Grooming): This meeting is recommended, yet not mandatory. The Product Owner and some, or all, of the rest of the team review items on the backlog to ensure that the backlog contains the appropriate items, that they are prioritized, and that the items at the top of the backlog are ready for delivery.

These events produce the artifacts listed below:

- Product Backlog

- Sprint Backlog

- Increment

Transparency

We apply two concepts in Scrum in order to achieve transparency throughout the process and within the artifacts.

- Definition of Ready

- Definition of Done

Acceptance Criteria can be used to leverage those two concepts.

How to Scrum?

At this point it should be clear that everything mentioned above is what we require our project teams to apply to their projects. However, time limits and the number of project members depend on different factors such as:

- Team structures

- Team size

- Client needs

- Project complexity

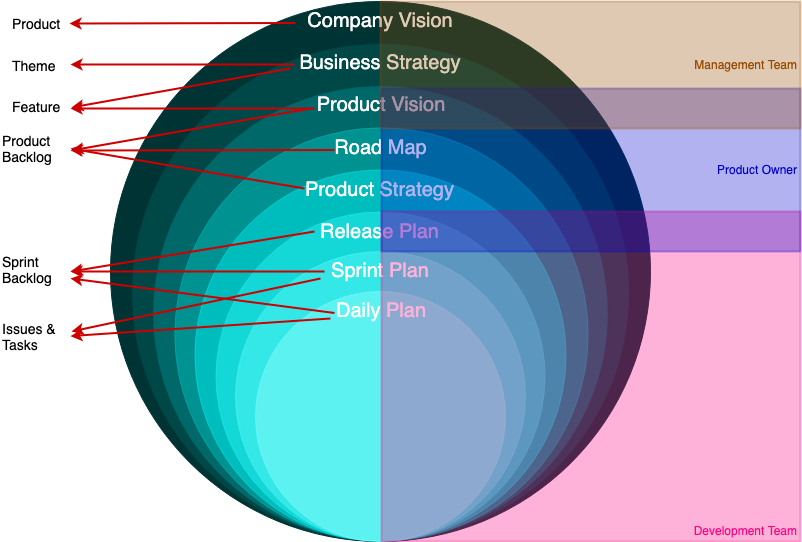

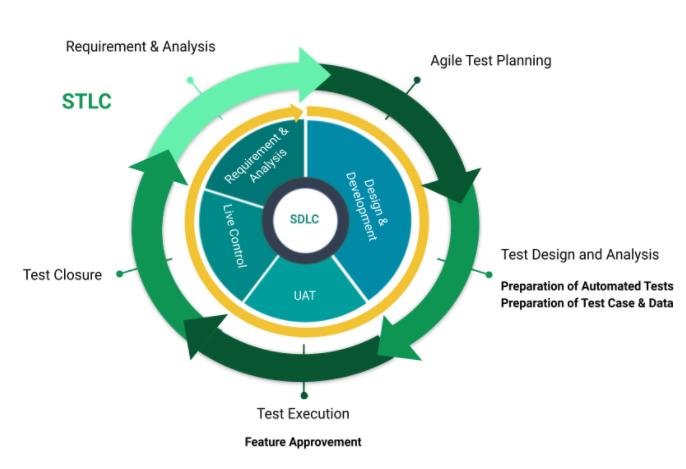

Scrum Methodology Requirement Levels

The requirement levels diagram summarizes the responsibilities of each entity as well as where the requirements fall under Scrum.

Subsections of Scrum

Sprint Planning

Frequency: Once per sprint

Meeting length: 2 - 8 hours

What is Agile Planning?

Agile planning is focused on getting the answers to simple questions like

- What is to be built?

- When will it be completed?

- How much will it cost and who should be involved?

The project managers also explore hidden dependencies between activities to minimize idle time and optimize the delivery period. Agile planning revolves around measuring the velocity and efficiency of an Agile team to assess when it can turn the user stories into processes, production-ready software, and quality product delivery. The ultimate goal of Agile planning is to have a clear picture of the project vision, the production roadmap with its sprint schedule, and the business interests. To simplify things, Agile planning can be divided into different levels:

- product vision

- product roadmap

- release

- iteration

- daily commitment

Level 1: Agile Planning For Product Vision – Five Tips

Agile planning starts with creating the product vision, which ensures that strategies are aligned properly and that the development team spends its time on creating the right, valuable product. Like a lighthouse, the product vision guides the team members toward the shared goal. The product vision statement describes how the product supports the organization’s strategies. You can simplify the process of Agile product vision development by making it a four-step exercise: development, drafting, validation, and finalizing. The following five tips will help you to get the most out of it:

- Product vision should deliver the unique feel of ownership to keep you motivated.

- Validate your product vision with all the stakeholders, Scrum team members, users etc.

- Develop the product vision iteratively & incrementally with the scope to make it better over time.

- The product vision pitch should address all the key concerns of different stakeholder groups pertaining to quality, product goals, competition, longevity and maintenance needs etc.

- Focus your product vision on the values for users and customers not merely on the most advanced technology.

Level 2: Agile Product Roadmap Planning – Five Tips

An Agile product roadmap is a plan that describes the way the product is likely to grow; it also leaves room for learning and change. To succeed in Agile management, you need a goal-oriented roadmap because it provides crucial information about the team's everyday work. As a powerful Agile management tool, it helps to align all the stakeholders and to estimate a sufficient budget for quality product development on schedule. Creating an effective roadmap is often a challenge because changes occur unexpectedly in an Agile environment; however, the following five tips will help you plan the most effective roadmap:

- Do all the necessary prep work including describing & validating the product strategy. To know more about ‘Product strategy in the Agile world’, visit - https://svpg.com/product-strategy-in-an-agile-world/ .

- Focus your product roadmap on goals, benefits, objectives, acquiring customers, removing technical debt and increasing engagement etc.

- Let your product roadmap tell a coherent story about the likely development of a product. To simplify the task, you can divide the product roadmap into two parts: an internal product roadmap and an external roadmap. The internal product roadmap is focused on development, service, supporting groups, marketing, sales, etc., while the external roadmap is focused on prospective and existing customers.

- Keep the product roadmap simple and realistic to make the features understood by everyone concerned.

- Make your product roadmap measurable; so, think twice before adding timelines and deadlines.

Level 3: Release Planning – Five Tips

In the Agile landscape, a release is a set of product increments released to the customer. The release plan defines how much work your team will deliver by the stated deadline. Release planning is a collaborative task involving the Scrum Master (facilitates the meeting), the Product Owner (shares the product backlog view), the Agile team (provides insights into technical dependencies and feasibility) and the stakeholders (the trusted advisors). The following five tips will help you in effective release planning:

- Focus on goals, benefits, and results.

- Take dependencies and uncertainties into account.

- Release early but don’t release just for the sake of scheduled releasing.

- Only release the work that is ‘Done’. To learn more about the ‘Definition of Done (DoD)’ in Agile, please visit - https://www.knowledgehut.com/blog/agile-management/definition-of-done-use-in-agile-project .

- Every release process has room for improvement. Continuous improvement of the release process helps you deliver more value for the product.

Level 4: Iteration Planning - Five Tips

The iteration planning is done by holding a meeting, where all the team members determine the volume of backlog items they can commit to deliver during the next iteration. The commitment is made according to the team’s velocity and iteration schedule. The following five tips will help you in effective iteration planning:

- Limit the iteration planning meeting to a maximum of 4 hours.

- The planning meeting is organized by the team and for the team; so, everyone should participate.

- Avoid committing to anything that exceeds the team’s historical velocity.

- Make time for retrospectives on past sprints before planning the next one. To learn more about ‘Agile retrospective’, visit - https://www.agilealliance.org/glossary/heartbeatretro/.

- Follow the four principles: prepare, listen, respect, and collaborate.
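The velocity rule in the tips above can be sketched in a few lines: average the story points completed in past sprints, then take backlog items in priority order until the next one would exceed that figure. The sprint history, backlog items, point values, and function names below are made up for illustration, and using a simple mean as the velocity is an assumption.

```python
def average_velocity(past_sprints: list[int]) -> float:
    """Mean story points completed per sprint."""
    return sum(past_sprints) / len(past_sprints)


def plan_iteration(backlog: list[tuple[str, int]], capacity: float) -> list[str]:
    """Take items in priority order until the next one would exceed capacity."""
    committed: list[str] = []
    used = 0
    for name, points in backlog:
        if used + points > capacity:
            break
        committed.append(name)
        used += points
    return committed


# Hypothetical history and prioritized backlog (name, story points).
velocity = average_velocity([21, 18, 24])
backlog = [("login", 8), ("search", 8), ("export", 5), ("audit log", 3)]
commitment = plan_iteration(backlog, velocity)
```

Stopping at the first item that does not fit keeps the priority order intact; a team could instead skip ahead to smaller items, but that is a planning decision rather than something the velocity rule dictates.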

Level 5: Daily Commitment Planning – Five Tips

Like many other planning activities in Agile management, daily commitment planning needs the synchronized partnership of teams. The daily planning meeting is focused on completing the top-priority features. The 15-minute standup meeting facilitates face-to-face communication on each individual’s progress and any impediments. The following five tips will help you in progress-oriented daily commitment planning:

- Keep it around the task board.

- Start on time regardless of who is present or not.

- Let each team member go through questions such as: what did I do yesterday, what is my plan for today, and is there any impediment?

- Use a ‘Parking Lot’ for unresolved issues. The purpose of daily Agile-Scrum planning is to let the team members know what is done, what needs to be done, and what hindrances exist, if any. Anything outside this scope should be listed in the ‘Parking Lot’ to be dealt with later.

- Do preparation ahead of time. The team members should know ‘what they need to share’.

Daily Scrum Meeting

Frequency: Daily

Meeting length: 10 - 15 minutes

Scrum meetings are a part of agile meetings, or scrum ceremonies, where all team members can sync up on the work they did in the last 24 hours, and go over what’s on deck for the next 24 hours.

Daily Scrum Meeting Checklist

Blockers

Is there anything preventing contributors from getting work done? Things to bring up here might be technical limitations, departmental and team dependencies, and personal limitations (like vacations booked, people out sick, etc.).

What did you do yesterday?

This is a quick rundown of what got done yesterday (and if anything didn’t get done, then why). This isn’t the time for each person to run down their whole to-do list – they should focus on the large chunks of work that made up their deep focus time, and the activities that are relevant to your team as a whole.

What are your goals for today?

Here, each team member will say what they want to accomplish – in other words, what they can be held accountable for in tomorrow’s daily scrum meeting.

How close are we to hitting our sprint goals? What’s your comfort level?

This agenda item will help the scrum master get an idea of how the team is feeling about how their day-to-day activities are impacting overall goals for the team, and how contributors are feeling about the pace of the sprint.

What to avoid in your daily scrum?

Lateness

Being late for any meeting is disruptive, but it’s particularly distracting when the meetings are only 10 minutes long. Hold your team accountable for being on time, every time.

Monopolizing the conversation

Giving each team member the opportunity to talk is hugely important. Reinforce this at the start of each scrum if you find that certain team members have a tendency to ramble on.

Don’t over-complicate your scrum meetings. Keep the agenda the same each time you meet, and make the technology for meeting consistent. Daily scrum meetings involve less than 10 minutes of meeting prep, max.

Other daily scrum meeting tips:

- Hold your daily scrums in the morning and at the same time each day

- Do your daily stand-ups in person (standing up) where possible. If that’s not possible, use a reliable and consistent tool for communicating remotely (we love a good Slack Bot for this).

- Let the team lead the conversation

- Be a time cop and don’t let your stand-ups run more than 10 minutes

Referenced from here.

Sprint Review

Frequency: Once per sprint

Meeting length: 1 - 4 hours

Sprint Review or a Demo?

Many of those practicing Scrum mistakenly call the Sprint Review a Demo. Is it just a matter of terminology? From my point of view, the Sprint Review is the most underestimated Scrum Event, and for many companies, its potential is yet to be revealed. It is true that the Demonstration or Demo is an essential part of the Sprint Review, but it isn’t the only one.

The Sprint Review is much more than just a Demo.

Let’s find out why.

Who attends the Sprint Review

The Scrum Team and stakeholders (users, other teams, managers, investors, sponsors, customers, etc.). One could say that we invite the whole world to the Sprint Review, and this is absolutely true.

How the Sprint Review Is Conducted:

- The Product Owner opens the meeting and tells the attendees what the Development Team completed during the current Sprint (what has been “Done” and “not Done”).

- The Development Team demonstrates the “Done” functionality (Demo), answers the questions and discusses problems they met on their way.

- The Product Owner discusses the current state of the Product Backlog, marketplace changes, and forecasts the likely release dates.

- The attendees collaborate on the Product Backlog Items, which can be completed during the next Sprint. Thus, the stakeholders get a shared understanding of the future work.

- A review of the budget, timeline, potential capabilities follows.

The Opportunity To Inspect And Adapt.

The Sprint Review is one of the five official Scrum Events and is an opportunity for the Scrum Team to practice empiricism. Here is a short summary of what we inspect and adapt during the Sprint Review.

Inspect: Sprint, Product Backlog, Increment, Marketplace, Budget, Timeline, Capabilities.

Adapt: Product Backlog, Release Plan.

Inspecting Is Much More Than Just An Increment.

The Sprint Review is not just about product demonstration, it is an inspection of the completed Sprint, the Product Backlog and the marketplace. Also, it is a place for reviewing budgets, timeline and capabilities. Each Sprint is an important risk mitigation tool and the Sprint Review is a decision point for the Product Owner.

Each Sprint can be the last one. The Product Owner makes a formal decision regarding financing the next Sprint during the Sprint Review.

Here are some decisions that can be formally made:

- Stopping/Pausing the development

- Financing the next Sprint

- Adding team(s) with an assumption that it will speed up the development

- Releasing Increment (can be done anytime during the Sprint)

Is My Sprint Review Good Enough?

Scrum is based on iterative and incremental development principles, which means getting feedback and making continuous updates to the Product Backlog. Unfortunately, teams often forget about it.

The Sprint Review is a great tool for getting feedback, while the number of changes to the Product Backlog after the Sprint Review can be an important indicator of how healthy your Scrum is.

Why Many Scrum Teams Don’t Go Beyond Demo

Many teams/companies underutilize the entrepreneurial spirit of Scrum and its concept where the Product Owner is a mini-CEO of the Product. Very often, stakeholders attend only the Increment demonstration (usually done using a projector) with few questions asked and then everybody leaves shortly afterwards. This may happen for several reasons:

- The Product Owner doesn’t actually “own” the product, cannot optimize the ROI or make strategic decisions about the product, and is, in fact, a Fake Product Owner (FPO). During the Sprint Review, stakeholders (with actual Product Owner among them) often “accept” the Scrum Team’s work.

- The company’s business and development departments continue to play the “contract game” with predefined scope and completion dates. In this case, the Sprint Review inevitably becomes a status meeting.

- Superficial Scrum implementation at the company.

- The Product Owner doesn’t collaborate enough with stakeholders.

- This is a case of “fake Scrum”, when the Scrum Team handles only a part of the system with inputs and outputs and not the real product. There is nothing to show and nothing to give feedback on.

Good Sprint Review Practices

- An informal gathering with coffee and cookies that looks more like a meetup, often held as an Expo or a Review Bazaar.

- The Product Backlog is updated after/during the Sprint Review.

- The meeting is often attended by the end users.

- The Development Team communicates directly with end users and stakeholders and gets direct feedback from them.

- The “Done” product is showcased on workstations where the stakeholders can play with the new functionality.

Signs of An Unhealthy Sprint Review

- A formal/status meeting.

- The new functionality is demonstrated only in a slide show.

- The Product Owner “accepts” the work completed by the Development Team.

- The Development Team isn’t (fully) present.

- Neither are stakeholders and/or end users.

- The demonstrated functionality does not meet the Definition of “Done”.

- The Product Backlog is not updated. The Scrum Team works with a predefined scope.

- The stakeholders “accept” the completed work from the Product Owner.

The Bottom Line

Well, I wrote quite a lot. Let’s sum it up.

- The Sprint Review is more than just a Demo. The Sprint Review is a place to discuss marketplace changes, examine the completed Sprint as an event, update the release schedule, and discuss the Product Backlog and the possible focus for the next Sprint. This is where the dialog between the Scrum Team and the stakeholders takes place and feedback on the product Increment is obtained.

- The number of changes to the Product Backlog can be an important sign of how healthy your Scrum is.

Sprint Retrospective

Frequency: Once or twice per sprint (Not mandatory)

Meeting length: 1 - 4 hours

Sprint Retrospective is an opportunity for the Scrum Team to inspect itself and create a plan for improvements to be enacted during the next Sprint.

The Sprint Retrospective occurs after the Sprint Review and prior to the next Sprint Planning. This is at most a three-hour meeting for one-month Sprints. For shorter Sprints, the event is usually shorter. The Scrum Master ensures that the event takes place and that attendees understand its purpose. This is the opportunity for the Scrum Team to improve, and all members should be in attendance.

During the Sprint Retrospective

The team discusses:

- What went well in the Sprint?

- What could be improved?

- What will we commit to improve in the next Sprint?

Expected Output

The Scrum Master encourages the Scrum Team to improve its development process and practices to make it more effective and enjoyable for the next Sprint. During each Sprint Retrospective, the Scrum Team plans ways to increase product quality by improving work processes or adapting the definition of “Done” if appropriate and not in conflict with product or organizational standards.

By the end of the Sprint Retrospective, the Scrum Team should have identified improvements that it will implement in the next Sprint. Implementing these improvements in the next Sprint is the adaptation to the inspection of the Scrum Team itself. Although improvements may be implemented at any time, the Sprint Retrospective provides a formal opportunity to focus on inspection and adaptation.

Backlog Refinement (Grooming)

Frequency: Once or twice per sprint (Not mandatory)

Meeting length: 2 - 4 hours

Backlog refinement (formerly known as backlog grooming) is when the product owner and some, or all, of the rest of the team review items on the backlog to ensure the backlog contains the appropriate items, that they are prioritized, and that the items at the top of the backlog are ready for delivery.

Backlog Refinement Checklist

Prioritization

Items should be discussed and prioritized based on customer / market needs.

Decomposition of Items

Each issue should be completable within a single sprint; any issue that is not should be decomposed into smaller items.

Cleanup of Deprecated Items

Some issues may have been deferred because the requirements changed or the feature is no longer needed. Such items can be cleaned out of the backlog during this meeting.

What to avoid in your grooming?

Planning

Planning has its own meeting. Attendees should not discuss which sprints these items will be developed in.

Old Issues - Completed Sprints

This meeting is for cleaning the backlog; completed items are out of its scope.

Completed items can be discussed during the retrospective. They have nothing to do with the grooming meeting.

Other grooming tips:

- PO can discuss priorities and requirements with stakeholders before the meeting.

- Weight (or any other measurement types) can be added during this meeting.

- Not everyone is needed in this meeting.

Referenced from here and here

Sprint

Frequency: Daily

Duration: 1 - 4 weeks

The heart of Scrum is a Sprint, a time-box of one month or less during which a “Done”, useable, and potentially releasable product Increment is created. Sprints have consistent durations throughout a development effort. A new Sprint starts immediately after the conclusion of the previous Sprint.

Sprints contain and consist of the Sprint Planning, Daily Scrums, the development work, the Sprint Review, and the Sprint Retrospective.

During the Sprint:

- No changes are made that would endanger the Sprint Goal;

- Quality goals do not decrease; and,

- Scope may be clarified and re-negotiated between the Product Owner and Development Team as more is learned.

Each Sprint may be considered a project with no more than a one-month horizon. Like projects, Sprints are used to accomplish something. Each Sprint has a goal of what is to be built, a design and flexible plan that will guide building it, the work, and the resultant product increment.

Sprints are limited to one calendar month. When a Sprint’s horizon is too long the definition of what is being built may change, complexity may rise, and risk may increase. Sprints enable predictability by ensuring inspection and adaptation of progress toward a Sprint Goal at least every calendar month. Sprints also limit risk to one calendar month of cost.

Cancelling a Sprint

A Sprint can be cancelled before the Sprint time-box is over. Only the Product Owner has the authority to cancel the Sprint, although he or she may do so under influence from the stakeholders, the Development Team, or the Scrum Master.

A Sprint would be cancelled if the Sprint Goal becomes obsolete. This might occur if the company changes direction or if market or technology conditions change. In general, a Sprint should be cancelled if it no longer makes sense given the circumstances. But, due to the short duration of Sprints, cancellation rarely makes sense.

When a Sprint is cancelled, any completed and “Done” Product Backlog items are reviewed. If part of the work is potentially releasable, the Product Owner typically accepts it. All incomplete Product Backlog Items are re-estimated and put back on the Product Backlog. The work done on them depreciates quickly and must be frequently re-estimated.

Sprint cancellations consume resources, since everyone regroups in another Sprint Planning to start another Sprint. Sprint cancellations are often traumatic to the Scrum Team, and are very uncommon.

Concepts

Subsections of Concepts

Definition of Ready

What is Definition of Ready?

The Definition of Ready is the set of properties a task must have before work on it can start. It has to be clear, open and transparent.

What are the perspectives that have to be covered?

- User Story / Task

- Sprint

- Test

User Story / Task Perspective

A user story has to cover all the necessary items of the INVEST principles.

The INVEST principles are:

- Independent (of all others)

- Negotiable (not a contract for a specific feature)

- Valuable (a vertical slice - feature ownership)

- Estimable (to a decent approximation)

- Small (enough to fit in a single iteration)

- Testable (even if the tests don’t exist yet)

For a user story to be accepted as ready, it has to cover the items below:

- The conditions of satisfaction have been fully identified for the story.

- The story details have to be understandable by the whole Scrum Team.

- The story has been estimated and is under a certain size, which is defined by the Scrum Team.

- The team’s user interface designer or Product Owner has mocked up, or even fully designed, any screens affected by the story.

- All external dependencies have been resolved, whether the dependency was on another team or on an outside vendor.

- Any test environment or devices related to the story have to be ready.

Sprint Perspective

- Prioritized sprint backlog

- Defects, user stories and other work the team has committed to are contained in the sprint backlog

- No hidden work; all backlog items have to be detailed enough for the next sprint.

- All team members have calculated their individual capacity for the sprint

- All user stories meet the Definition of Ready.

Test Perspective

How should the Definition of Ready be defined?

The Definition of Ready does not have to cover every item listed above. Project members have to select the items necessary for their own and the customer’s needs. The items can be extended, and teams can add their own custom rules to the team’s Definition of Ready list. The most important rule is that each team member has to be aware of the Definition of Ready and follow its rules.

Definition of Ready Example

Definition of Done

What is Definition of Done?

The Definition of Done is an agreed upon set of items that must be completed before a project, user story, test case, deployment steps etc. can be considered complete. It is applied consistently and serves as an official gate separating things from being “in progress” to “done.”

What are the perspectives that have to be covered?

- Development

- Test

- Deployment

- Documentation

Development Perspective

- Code has to be written following the defined code formatting rules.

- Comments have to be added to explain complex solutions.

- The implementation has to stay within the defined code complexity levels.

- Any third-party solution (library, framework etc.) which endangers CDP should not be used in the implementation.

- Before the implementation is committed, the written code/solution has to be reviewed by at least one other developer.

- If the implementation needs to integrate with another module that has not been implemented yet, mock data or a mock module should be used to test the implementation.

Test Perspective

- Unit tests have to be written by the developer.

- All edge cases have to be tested by the developers.

- If needed to keep the CI/CD pipeline alive, the necessary updates have to be made to the CI/CD configuration for the tests.

Deployment Perspective

- The implementation has to be tested by the testers before deployment.

- Before deployment, the tester has to complete all regression tests to be sure that the implementation does not affect any other modules.

- Before deployment, all automated tests have to be run. If test coverage is below the defined coverage percentage, the implementation has to be rolled back.

- If the implementation uses third-party solutions/apps, the packages should be added to the deployment environment (Docker or Yocto scripts).

Documentation Perspective

- If implementation steps have complex solutions, developers have to write a document or update existing development documents to explain their solutions properly.

How should the Definition of Done be defined?

The Definition of Done does not have to cover every item listed above. Project members have to select the items necessary for their own and the customer’s needs. The items can be extended, and teams can add their own custom rules to the team’s Definition of Done list. The most important rule is that each team member has to be aware of the Definition of Done and follow its rules.

Definition of Done Example

Acceptance Criteria

Acceptance criteria are the conditions that a software product must meet to be accepted by a user, a customer, or another system. They are unique for each user story and define the feature behavior from the end-user’s perspective. Well-written acceptance criteria help avoid unexpected results at the end of a development stage and ensure that all stakeholders and users are satisfied with what they get.

The main purpose of the Acceptance Criteria is to clarify the stakeholders’ requirements.

Some of the criteria are defined and written by the product owner when he or she creates the product backlog. The others can be further specified by the team during user story discussions after sprint planning.

- Acceptance Criteria have to be written before development starts.

- Acceptance Criteria shouldn’t be too narrow.

- Acceptance Criteria have to be achievable in one sprint iteration.

- Acceptance Criteria have to be measurable and not too broad.

- Acceptance Criteria shouldn’t contain technical details.

- Acceptance Criteria have to be understandable by all Scrum Team members.

- Acceptance Criteria have to be testable.

Acceptance Criteria should serve the following purposes:

- Detailing the feature scope: Acceptance Criteria define the boundaries of user stories. They provide precise details on functionality that help the team understand whether the story is completed and works as expected.

- Describing negative scenarios: Acceptance Criteria may require the system to recognize unsafe password inputs and prevent a user from proceeding further. An invalid password format is an example of a so-called negative scenario, in which a user enters invalid input or behaves unexpectedly. Acceptance Criteria define these scenarios and explain how the system must react to them.

- Setting communication: Acceptance criteria synchronize the visions of the client and the development team. They ensure that everyone has a common understanding of the requirements: developers know exactly what kind of behavior the feature must demonstrate, while stakeholders and the client understand what’s expected from the feature.

- Streamlining acceptance testing: Acceptance criteria are the basis of user story acceptance testing. Each acceptance criterion must be independently testable and thus have clear pass or fail scenarios. They can also be used to verify the story via automated tests.

- Feature estimation: Acceptance criteria specify what exactly must be developed by the team. Once the team has precise requirements, they can split user stories into tasks that can be correctly estimated.

Acceptance Criteria Template for User Story

Scenario: the name for the behavior that will be described

Given: the beginning state of the scenario

When: a specific action that the user takes

Then: the outcome of the action in “When”

And: used to continue any of the three previous statements

Example;

User story: As a user, I want to be able to recover the password to my account, so that I will be able to access my account in case I forgot the password.

Scenario: Forgot password

Given: The user has navigated to the login page

When: The user selected forgot password option

And: Entered a valid email to receive a link for password recovery

Then: The system sent the link to the entered email

Given: The user received the link via the email

When: The user navigated through the link received in the email

Then: The system enables the user to set a new password

Source: https://www.altexsoft.com/blog/business/acceptance-criteria-purposes-formats-and-best-practices/
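Because each criterion has a clear pass or fail outcome, the "Forgot password" scenario above could be checked by an automated test. The Python sketch below is illustrative only: `PasswordRecoveryService`, its methods and the e-mail addresses are hypothetical stand-ins for the system under test, not part of any real application.

```python
import secrets


class PasswordRecoveryService:
    """Hypothetical in-memory stand-in for the system under test."""

    def __init__(self, registered_emails):
        self.registered_emails = set(registered_emails)
        self.sent_links = {}  # email -> recovery token
        self.passwords = {}   # email -> new password

    def request_recovery(self, email):
        # Only registered addresses receive a recovery link.
        if email not in self.registered_emails:
            return False
        self.sent_links[email] = secrets.token_hex(8)
        return True

    def set_new_password(self, email, token, new_password):
        # The token from the link must match the one that was sent.
        if self.sent_links.get(email) != token:
            return False
        self.passwords[email] = new_password
        del self.sent_links[email]
        return True


def test_forgot_password():
    # Given: the user is on the login page (a registered account exists)
    service = PasswordRecoveryService(["user@example.com"])
    # When: the user selects "forgot password" and enters a valid email
    sent = service.request_recovery("user@example.com")
    # Then: the system sends the link to the entered email
    assert sent and "user@example.com" in service.sent_links

    # Given: the user received the link via email
    token = service.sent_links["user@example.com"]
    # When: the user follows the link
    # Then: the system enables the user to set a new password
    assert service.set_new_password("user@example.com", token, "N3w-pass!")


def test_unknown_email_gets_no_link():
    # Negative scenario: an unregistered address must not receive a link.
    service = PasswordRecoveryService(["user@example.com"])
    assert not service.request_recovery("stranger@example.com")
    assert service.sent_links == {}


test_forgot_password()
test_unknown_email_gets_no_link()
```

Each Given/When/Then step maps to one comment and one statement, so a failing assertion points directly at the criterion that is not met.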

Subsections of Source Control Management

Commit Messages

Commit messages should be self-explanatory, with enough detail for developers, maintainers, testers and every other member of the development team to understand exactly what has changed with each commit.

- Commit type gives the first hint about what the commit changes:

  - “[+]”: New feature

  - “[#]”: Bug fix

  - “[~]”: Refactoring, general changes, or anything else that doesn’t fit the first two

- Summary is mandatory and has two parts: the name of the module, followed by a summary of the change.

- Description consists of several parts. Treat it as a template for explaining the change in enough detail:

  - how the algorithm works

  - what the new dependencies are

  - how this change solves the problem/creates a solution

  - additional notes

  - relevant links (issues, other MRs etc.)

  - co-authors.

- Use the imperative mood in the summary line while writing commit messages. A properly written summary line for a commit message should be able to complete the following sentence: “This commit will …”.

  - Correct: This commit will change the search algorithm.

  - Incorrect: This commit will the search algorithm is changed.

All parts other than the summary are optional, but using them is highly encouraged. A template and an example can be found below:

template:

[~#+] Module Name Abbreviation(if needed): Summarize the change in less than 50 characters

What is changed (if needed):

- Explain new algorithm.

- Explain new dependencies.

Because (if needed):

- Explain the reasons you made this change

- Make a new bullet for each reason

- Each line should be under 72 characters

Remarks (if needed):

Include any additional notes, relevant links, or co-authors.(if needed)

Issue: #AZURE_ISSUE_ID

example:

[~] search: Refactor algorithm

What is changed:

- The new algorithm's worst case is O(n log(n)).

- "HyperSupperDupper Sort" library is not used anymore.

Because:

- Old algorithm was not optimized.

- System tends to crash with big data.

Remarks:

Corner cases were not covered. (see # 11231)

These changes should resolve issue # 1337.

This commit removed the getFirst(), so please stop using it!

Issue: #2019

Co-authored-by: cool.guy <cool.guy@bosphorusiss.com>

Check this article to read more about writing good commit messages.

Another cool git commit can be found here.
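The template rules above lend themselves to an automated check, for example in a commit hook. The following Python sketch is one possible reading of the template, not an official tool: the function name `check_commit_message` is hypothetical, and treating the module part as optional is an assumption based on the "(if needed)" note in the template.

```python
import re

# Summary line: "[type] module: summary", where type is one of + # ~.
# Assumption: the "module: " part may be omitted ("if needed").
SUMMARY_RE = re.compile(
    r"^\[([+#~])\] (?:(?P<module>[\w.-]+): )?(?P<summary>\S.*)$"
)

MAX_SUMMARY = 50    # summary text should be less than 50 characters
MAX_BODY_LINE = 72  # each further line should be under 72 characters


def check_commit_message(message):
    """Return a list of problems; an empty list means the message passes."""
    lines = message.splitlines()
    if not lines:
        return ["empty commit message"]
    problems = []
    match = SUMMARY_RE.match(lines[0])
    if match is None:
        problems.append("summary must look like '[+] module: Summary'")
    elif len(match.group("summary")) >= MAX_SUMMARY:
        problems.append("summary must be shorter than 50 characters")
    # Check every line after the summary line against the length limit.
    for number, line in enumerate(lines[1:], start=2):
        if len(line) >= MAX_BODY_LINE:
            problems.append(f"line {number} must be shorter than 72 characters")
    return problems


print(check_commit_message("[~] search: Refactor algorithm"))
print(check_commit_message("search: Refactor algorithm"))
```

The first call passes and prints an empty list; the second is rejected because the commit type marker is missing.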

Versioning

Versioning helps us create milestones in a project so that we can understand the project's state. This part discusses versioning in general and semantic versioning in particular.

Semantic Versioning

Why do we need it?

The bigger our systems grow and the more packages we integrate into our software, the more problematic releasing new package versions can become:

- Version Lock: If we specify dependencies too strictly, we can’t upgrade a package without upgrading all packages that depend on it.

- Version Promiscuity: If we specify dependencies too loosely, we are being wishful about compatibility between different versions.

Semantic versioning allows us to convey what has changed from one version to the next. That way we can create clever dependency specifications and keep developing and releasing new versions of our packages without unnecessarily having to update all dependent packages.

What is it?

Semantic versioning offers a scheme as below:

[MAJOR.MINOR.PATCH]

Version Incrementing Rules

Increment the:

- MAJOR version when you make incompatible API changes. It MAY also include minor and patch level changes. The minor and patch versions reset to 0 when the major version is incremented.

- MINOR version when you add functionality in a backwards compatible manner. It MAY include patch level changes. The patch version resets to 0 when the minor version is incremented.

- PATCH version when you make backwards compatible bug fixes.
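The incrementing and reset rules above can be written down in a few lines. The Python sketch below is a minimal illustration; the function name `bump` and its `part` argument are hypothetical, and pre-release or build metadata suffixes are deliberately not handled.

```python
def bump(version, part):
    """Return the next version after a change of the given kind.

    version is "MAJOR.MINOR.PATCH", optionally prefixed with "v".
    part is "major", "minor" or "patch".
    """
    prefix = "v" if version.startswith("v") else ""
    major, minor, patch = (int(n) for n in version[len(prefix):].split("."))
    if part == "major":
        # Incompatible change: minor and patch reset to 0.
        major, minor, patch = major + 1, 0, 0
    elif part == "minor":
        # Backwards compatible feature: patch resets to 0.
        minor, patch = minor + 1, 0
    elif part == "patch":
        # Backwards compatible bug fix.
        patch += 1
    else:
        raise ValueError(f"unknown version part: {part}")
    return f"{prefix}{major}.{minor}.{patch}"


print(bump("1.4.2", "patch"))  # 1.4.3
print(bump("1.4.2", "minor"))  # 1.5.0
print(bump("1.4.2", "major"))  # 2.0.0
```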

Remarks

- Software using semantic versioning MUST declare a public API. It should be precise and comprehensive.

- MAJOR version 0 is for initial development. The public API should not be considered stable.

- A bug fix is defined as an internal change that fixes incorrect behavior.

Reference: Semantic Versioning Specification
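The version lock and version promiscuity trade-off described earlier can be made concrete with two dependency rules. The Python sketch below is an assumption-laden illustration: `is_exact` and `is_compatible` are hypothetical helpers, and the "same MAJOR, not older" rule is just one common way to express a dependency range. It ignores the special case of MAJOR version 0, where the API is not considered stable.

```python
def parse(version):
    """Turn 'MAJOR.MINOR.PATCH' into a tuple of ints, e.g. (1, 4, 2)."""
    major, minor, patch = version.split(".")
    return int(major), int(minor), int(patch)


def is_exact(required, candidate):
    """Overly strict rule that leads to version lock."""
    return parse(required) == parse(candidate)


def is_compatible(required, candidate):
    """Hypothetical rule: same MAJOR version and not older than required."""
    req, cand = parse(required), parse(candidate)
    return cand[0] == req[0] and cand >= req


for candidate in ["1.2.0", "1.4.1", "2.0.0"]:
    print(candidate, is_exact("1.2.0", candidate), is_compatible("1.2.0", candidate))
```

With a requirement of 1.2.0, the exact rule rejects 1.4.1 while the compatible rule accepts it, and both reject 2.0.0 because a MAJOR bump signals incompatible changes.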

Semantic Versioning in Action

In this part we will gradually develop a hypothetical application and use semantic versioning along the way. Let’s say you’re building an application called network-manager. It has one initial requirement: it should return the IP address of the network interface of the machine it runs on, and that functionality should be available under the /get-ip-address endpoint.

NOTE

There are several ways to communicate with the application. We can use arguments/options, listen for keystrokes, or start a server inside it and communicate via a client application. We’re not going into details, but the idea is that an application, one way or another, presents an interface to interact with the world around it, and that part is called the public API. To use Semantic Versioning correctly, it’s important to define which part of your software constitutes the public API.

After adding this functionality we can create the tag v0.1.0 to mark the initial version.

If you run the application and call the /get-ip-address endpoint, it responds as below:

{
  "interface": "enp0s3",
  "ip_address": "192.168.10.2/24"
}

After a certain amount of time you realize that it takes too long to respond. You find the reason and ship a bugfix. Time to bump the version. This change was backward compatible because the application still responds the same way. We also didn’t add new functionality. So there is no need to bump the major or minor unit; increasing the patch unit is enough. The new version is v0.1.1

It’s time to bring new functionality to the table! You realize that it would be a good idea to show the MAC address as well, and that it should be reachable via the /get-mac-address endpoint. After developing this functionality, it is time to check that everything works fine.

So you run the application and ask for the MAC address via /get-mac-address. Here is the response:

{
  "interface": "enp0s3",
  "mac_address": "0a:0b:0c:0x:0y:0z"
}

It looks fine and we should assign a new version. We now have two different endpoints (/get-ip-address and /get-mac-address). Since we didn’t touch the /get-ip-address endpoint, it still behaves as before, and because it was the only endpoint that existed before this change, it’s okay to say the change was backward compatible. Yet this is a new feature, so we should bump the minor unit. The new version is v0.2.0

Network-manager currently returns the first interface it finds, and because we only had one network interface in our workstation, that was okay. But there might be more than one network interface where our application runs. The new goal is to return all IP and MAC addresses where applicable. After a few changes, it is time to run basic checks in a different environment with two physical network interfaces:

/get-ip-address responds with:

[
  {
    "interface": "enp0s3",
    "ip_address": "192.168.10.2/24"
  },
  {
    "interface": "enp0s4",
    "ip_address": "192.168.11.2/24"
  }
]

/get-mac-address responds with:

[
  {
    "interface": "enp0s3",
    "mac_address": "0a:0b:0c:0x:0y:0z"
  },
  {
    "interface": "enp0s4",
    "mac_address": "1a:1b:1c:1x:1y:1z"
  }
]

Before this change the endpoints returned a single JSON object, but now they return an array of JSON objects. This means consumers of network-manager have to change their code to be compatible with the new version, so our change was not backward compatible. We have to bump the major unit. The new version is v1.0.0
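To see why the last change is breaking, consider a consumer written against the old response shape. The Python sketch below uses the /get-ip-address responses from the walkthrough as literal strings; the two client functions are hypothetical and only illustrate how a consumer might parse the response.

```python
import json

# Response bodies copied from the walkthrough: before the change the
# endpoint returned one object, after it an array of objects.
RESPONSE_OLD = '{"interface": "enp0s3", "ip_address": "192.168.10.2/24"}'
RESPONSE_NEW = (
    '[{"interface": "enp0s3", "ip_address": "192.168.10.2/24"},'
    ' {"interface": "enp0s4", "ip_address": "192.168.11.2/24"}]'
)


def read_ip_old_client(body):
    """Hypothetical consumer written against the single-object shape."""
    return json.loads(body)["ip_address"]


def read_ips_new_client(body):
    """Hypothetical consumer updated for the array shape."""
    return [entry["ip_address"] for entry in json.loads(body)]


print(read_ip_old_client(RESPONSE_OLD))   # works with the old shape
print(read_ips_new_client(RESPONSE_NEW))  # works with the new shape

try:
    read_ip_old_client(RESPONSE_NEW)
except TypeError as error:
    # Indexing a list with a string key fails, so the old client breaks.
    print("old client is broken by the new response:", error)
```

The old client cannot be used unchanged against the new response, which is exactly the situation a MAJOR bump is meant to signal.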

Branching Strategy

BISS Branching Strategy

A branching strategy defines the activities a project team should carry out for integration, deployment and even delivery. In this part we will explain what we use for branching on a daily basis and briefly touch on several other branching strategies.

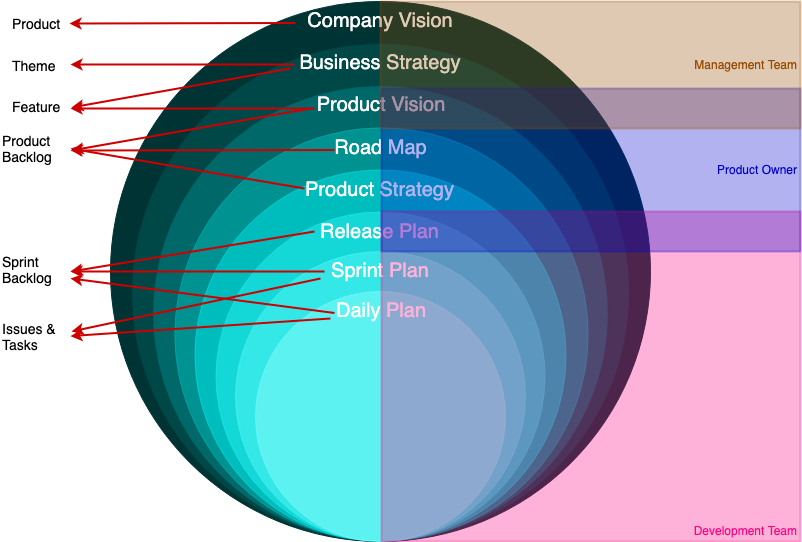

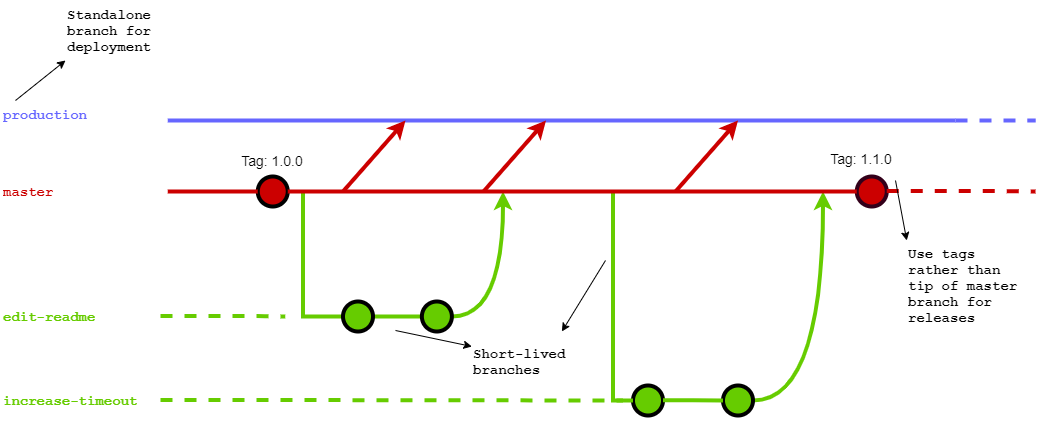

BISS Flow

This is the first workflow attempt that addresses the way of working of multiple teams. It is by no means finished and should improve over time.

Let’s look at the usage of each branch:

- Production (purple): This branch should point to the production-ready state of your software. Any merge request to the production branch should result in a deployment to your prod environment

- Master (red): Integration point for feature branches. Feature branches should branch off from here and merge back here. Tagging should be done on the master branch. There is no need to tag every time a feature branch is merged, but there should be a tagging policy.

- Feature (green): Contains any improvement that will reach the customer/user soon.

Few more points:

- Since a dev branch serves mostly as a melting pot and the master branch already does that, we decided not to use a dev branch in BISS flow.

- Feature branch names should be brief but comprehensive, with words separated by hyphens

- We should use rebasing when updating feature branches from master

- Testing should be done before merging back into master

- If supporting more than one version is necessary, different branches might be used to reflect those versions (up until now we, here in BISS, haven’t maintained more than one version of a piece of software. Since this scenario is not clear yet, it would be too vague and impractical to put into BISS flow)

Other Branching Strategies

Git Flow

This is the oldest approach that aims to solve the integration problem. It has permanent master and develop branches, plus feature, release and hotfix branches that live for a limited amount of time. Releasing the software is the main goal, and branches are created with this idea in mind. Depending on the circumstances, temporary branches might merge into the master and/or develop branches.

Let’s briefly touch on each branch:

- Master: Reflects what is alive at production

- Develop: Melting pot for feature branches

- Feature: Concentrates on a new feature

- Release: Provides room for release preparation

- Hotfix: Aims to solve unexpected problems at production

It has too many branches to keep track of, requires mostly manual steps to manage, and demands git CLI knowledge.

GitHub Flow

This is a much simpler form of git flow. Instead of losing momentum while juggling branches, it puts the emphasis on deploying more often.

The master branch should always be in a deployable state. Apart from the permanent master branch, there are temporary feature branches that bring new features or bug/security fixes into being.

It can be explained as below:

- The master branch should be reliable; don’t break its integrity

- Feature branches should have descriptive names that convey the idea at first glance

- Push to the remote server as often as possible; since everyone works on their own branch, you cannot break anything

- Use pull requests for code review

- Merge (not rebase) after the pull request is accepted

- Deploy frequently

GitLab Flow

GitLab flow is not much different from GitHub flow in essence. It also uses a permanent master branch and short-lived feature branches, and encourages merging feature branches back into master often. A few points also set GitLab flow apart from git flow.

On top of what GitHub flow says, GitLab flow offers a few additional branches for different needs:

- Production branch: If deployment is not possible at a given time, we can create a branch that reflects what should be in the production environment, so the team can continue working with the master branch

- Environment branches: If there are environments other than production, such as staging and pre-production, we can create branches with corresponding names to reflect those environments

- Release branches: If releasing (not deploying) is the need, we can use release branches. Let’s say a bug fix is crucial: we should first merge it into master and then cherry-pick it into the release branch, so that the bug doesn’t occur again and again

To avoid unnecessary merge commits, it recommends rebasing when updating a branch from master, but merging when bringing the branch back into master.

Since the master branch is considered reliable, GitLab flow promotes testing before merging back into master.

Merge Requests

A Merge Request (MR) is a request to merge one branch into another.

Use merge requests to visualize and collaborate on proposed changes to source code.

How to create a merge request?

Before creating a merge request, read through an introduction to Merge Requests to familiarize yourself with the concept, the terminology, and to learn what you can do with them.

Every merge request starts by creating a branch. You can either do it locally through the command line, via a Git CLI application, or through the GitLab UI.

- Create a branch and commit your changes

CLI Example

git checkout -b "feature_branch" # Create a local branch and switch to it

You can also create a branch from the web application, depending on your work style. If you create the branch from the GitLab UI and develop in your favorite IDE, these commands will help you out:

git fetch

git checkout feature_branch

After creating a branch, stage your changes and commit them with a commit message.

For example:

git add src/* # Stage the changed files under the src directory

git commit -m "Add Login Endpoint."

- Push your local branch to remote

git push --set-upstream origin feature_branch # Push your local branch to remote

Now you are ready to create your merge request.

- Create a Merge Request

When you start a new merge request, regardless of the method, you are taken to the New Merge Request page to fill it with information about the merge request.

Important Notes:

Always create the merge request against the source branch (the branch you created your new branch from).

For example, if you created a branch from 'dev', open your merge request to 'dev', not to 'master' or any other branch.

When creating a merge request, follow the steps listed below:

- Assign the merge request to a colleague for review. Generally, more than one assignee is better.

- Set a milestone to track time-sensitive changes.

- Add labels to help contextualize and filter your merge requests over time.

- Attach a related issue.

- Add merge request dependencies to restrict it to be merged only when other merge requests have been merged.

- Set the merge request as a Draft to avoid accidental merges before it is ready. While doing that, you can use the title prefix WIP:. Don’t forget to add a description once it is ready.

- Write a description of the changes. It also helps reviewers understand what they are looking at.

While writing a merge request description, make sure you help the reviewer understand what he/she is looking at. Otherwise, you should be okay with your colleagues closing your merge request without merging it.

Description Example:

## What does this MR Do?

* Adds Session Endpoint Paths to API Path List

* Reconfigures Public, User and Technician Role Available API Path Lists.

* Adds Login, Logout and ValidateSession Endpoints

Closes #25!(Session Implementation)

- Once you have created the merge request, you can follow these subsections to finalize the work:

Merge Request Reviews

- The pipeline should complete successfully.

- Check whether the MR description is complete.

- Review the code carefully (check the How to review section)

- If you have any discussion points, comment on each one as a separate topic. (Check the How to discuss section)

- If all threads/requested changes are resolved, merge it (always fast-forward).

How to review?

- Perform a Review in order to create multiple comments on a diff and publish them once you’re ready.

- Perform inline code reviews.

- Add code suggestions to change the content of merge requests directly into merge request threads, and easily apply them to the codebase directly from the UI.

- If everything looks OK, please approve the code with a comment so that any other reviewer can see your comment and close the MR if needed.

How to discuss?

- The company’s public language is English. Be aware of that

- Always try to be constructive

- Explain every detail of the problem to convey your ideas/concerns clearly