Test Strategy

Scope

This document covers the testing and approval steps of development activities, including new features and bug fixes.

Purpose

- To determine if the software meets the requirements

- To explain the software testing and acceptance processes, methods, and responsibilities

Software testing is an activity that investigates the software under test in order to provide quality-related information to stakeholders. QA (quality assurance) is the implementation of policies and procedures intended to prevent defects from reaching customers. The test team carries out both testing and QA activities.

Responsibility & Authority

Client or Business Unit

- Submits requests by opening issues when required.

- Reviews and approves the developed applications and processes.

Development Team & Project Manager

Develops the applications and processes requested in issues opened by the business units or the client.

Test Team

- Manages the test process and ensures that testing activities are carried out across the company.

- Follows new technologies and methods and evaluates their applicability.

- Evaluates which manual tests should be automated and maintains the automated tests.

Test Approach

The QA process includes the following test activities:

Story/Requirement Review and Analysis: The test team should review (static testing) the user stories or requirements defined by the customer or product owner. Logical mistakes and gaps should be reported.

- Test Object: Requirements or Stories

- Test Deliverables: Analysis report

Unit Testing: Usually performed by the development team, unless there is a specific need for the test team to perform it. The purpose is to validate that each unit of the software code performs as expected.

- Test Object: The code

- Test Deliverables: Defects
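The sketch below shows what a unit test at this level can look like. It uses Python's standard `unittest` module. The function `apply_discount` and its rules are hypothetical and exist only to show one test per expected behavior of a single unit.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    """Each test checks one expected behavior of the unit in isolation."""

    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        # Out-of-range input must fail loudly instead of returning a wrong price.
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)
```

If the file is saved as, for example, `test_discount.py`, the tests can be run with `python -m unittest test_discount`.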

Exploratory Testing: A testing activity in which test cases are not created in advance. Instead, testers explore the system on the fly.

- Test Object: The application

- Test Deliverables: Defects, Test Report

Feature Validation Tests: Testing the implementation of a user story, requirement, etc.

- Test Object: Part of the application

- Test Deliverables: Defects

Smoke Testing: Preliminary testing to reveal simple failures that are severe enough to reject a prospective software release.

- Test Object: The application

- Test Deliverables: Defects, Test Report

Performance, Load, Stress, and Accessibility Tests: Non-functional tests.

- Test Object: The application

- Test Deliverables: Defects, Test Report

Integration Testing, System Testing, and E2E Testing: Functional tests.

- Test Object: The application or a part of the application

- Test Deliverables: Defects, Test Report

Change-Based Testing: Regression testing is the process of testing a system (or part of a system) that has already been tested, using a set of predefined test scripts, in order to discover new errors caused by recent changes. Regression testing can monitor the effects of software errors or regressions (such as degraded performance), functional and non-functional improvements, patches to specific areas of the system, and configuration changes. Re-testing is also performed after every bug fix.

- Test Object: The application

- Test Deliverables: Defects, Test Report

User Acceptance Testing (UAT): Ideally, UAT should be performed by the customer. If the customer or the product owner will not or cannot run it, a test team member who is not one of the project's testers should perform UAT at the end of every project to increase quality. These tests should focus on UX, and the tester should take the end user's point of view. UAT should be brief, because its main aim is not to find all bugs.

- Test Object: The application

- Test Deliverables: Suggestions, defects, test report

Other activities:

- Bug Triage: A process in which each bug is prioritized based on its severity, frequency, risk, etc.

- Bug Root-Cause Analysis: Identifying the underlying causes of defects.
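A simple scoring rule can support bug triage. The sketch below makes several assumptions: severity, frequency and risk are each rated from 1 (low) to 4 (critical), and the priority score is their product. The `Bug` fields, the scales and the rule are all hypothetical. Each project would define its own.

```python
from dataclasses import dataclass


@dataclass
class Bug:
    """Hypothetical bug record; each factor is rated 1 (low) to 4 (critical)."""
    key: str
    severity: int
    frequency: int
    risk: int

    def score(self) -> int:
        # Assumed rule: multiply the factors so that a bug which is severe,
        # frequent and risky rises clearly above the others.
        return self.severity * self.frequency * self.risk


def triage(bugs: list[Bug]) -> list[Bug]:
    """Return the bugs ordered from highest to lowest priority score."""
    return sorted(bugs, key=lambda b: b.score(), reverse=True)


backlog = [
    Bug("BUG-1", severity=2, frequency=4, risk=1),
    Bug("BUG-2", severity=4, frequency=2, risk=3),
    Bug("BUG-3", severity=1, frequency=1, risk=2),
]
for bug in triage(backlog):
    print(bug.key, bug.score())  # BUG-2 24, then BUG-1 8, then BUG-3 2
```

A product reacts strongly to any single high factor. A weighted sum is a common alternative when one factor, such as severity, should dominate.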

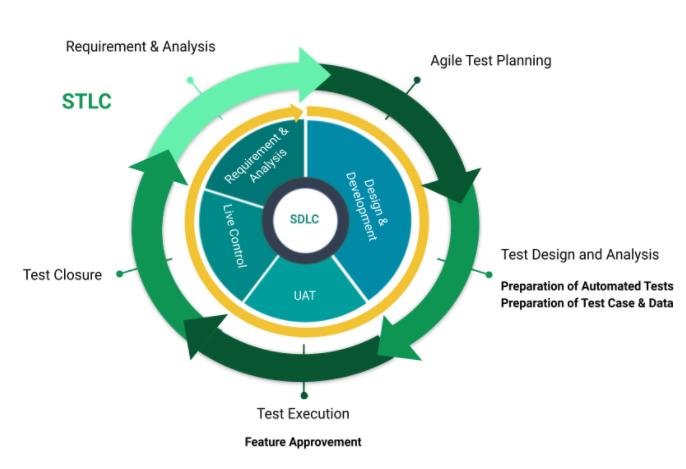

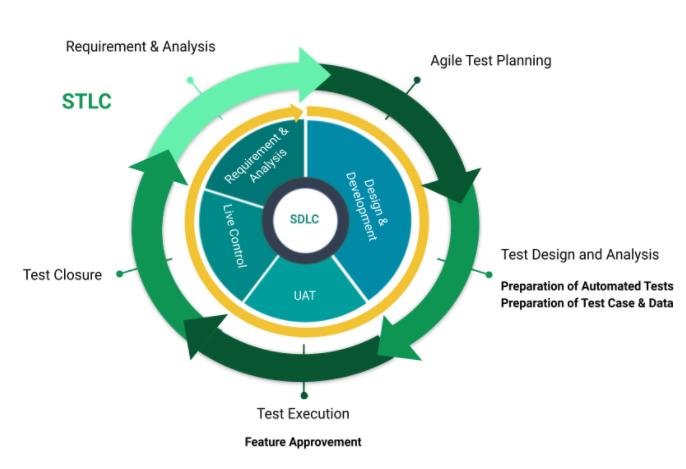

In international standards, this process is called the Software Test Life Cycle (STLC). At BISS, it is carried out in parallel with the Software Development Life Cycle (SDLC).

SDLC & STLC at BISS

The STLC process starts when the test request is submitted and the test team is included in the project as part of the system analysis studies. It then continues through Requirements Review, Design & Development, and Feature Approval, and is completed with Live Control.

Well-written requirements form the foundation of a successful project. The requirements review is an opportunity to check the requirements document for errors and omissions and to confirm that the overall quality of the requirements is acceptable before the project proceeds. It is also a final opportunity to communicate and confirm the project requirements with all stakeholders. The test team carefully reviews all requirements.

Meanwhile, preparation of the test plan shall start once the requirements are clarified. Based on the requirements, the test plan should document the technologies to be used, the test approach, the test strategy, and the test types to be performed at each test level. Test entry and exit criteria should also be defined. The test plan shall not contain technical details that could change during the project. It shall be approved by the product owner and read by all stakeholders.

During the design and development phase, test scenarios are prepared and, if possible, reviewed by stakeholders. At the same time, test data is prepared for running the test scenarios.

Test environment preparation starts after the test data is prepared. In this step, the environment in which the test scenarios will be run is set up, and the necessary tools are installed and configured. If test scenarios are to be automated, the automated test scripts shall be prepared in this step.

After test data and the test environment are prepared, functional testing and non-functional testing activities shall be performed.

Automated tests consist of the scenarios scripted during automation and the automated regression scenarios. Manual tests run the prepared scenarios the way an end user would. Both are applied to achieve optimum efficiency in the STLC process.

The Test Team also carries out the smoke test. This type of test checks the main functions of a product after deployment to any environment (test, production, etc.).

If the customer or the product owner does not perform UAT, a test team member who is not one of the project's testers should perform it.

Other non-functional tests, such as performance, load, stress, or accessibility testing, can be performed if the project requires them.

Defect records are created in the Project Management Tool for detected defects. Resolved defects are retested once the entry criteria defined in the test plan are satisfied.

The test team prepares the test report, manually or automatically. The report contains the latest status of the project and is shared periodically with the PO, the development team, or all stakeholders. Its content and level of detail should be decided by the development team.

Entry & Exit Criteria

Entry Criteria

Entry criteria are the required conditions and standards for work product quality that must be present or met prior to the start of a test phase.

Entry criteria include the following:

- Review of the completed test script(s) for the prior test phase.

- No open critical defects remaining from the prior test phase.

- Correct versioning of components moved into the appropriate test environment.

- Complete understanding of the application flow.

- The test environment is configured and ready.

Exit Criteria

Exit criteria are the required conditions and standards for work product quality that block the promotion of incomplete or defective work products to the next test phase of the component.

Exit criteria include the following:

- Successful execution of the critical test scripts for the current test phase.

- Test cases have been written and reviewed.

- No open critical defects.

- Component stability in the appropriate test environment.

- Product Owner accepts the User Story.

- Functional Tests passed.

- Acceptance criteria met.
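Entry and exit criteria are yes/no conditions, so a team could keep them as a checklist and block promotion while any item is unmet. The sketch below is minimal. The criterion strings are shortened from the lists above, and the `gate` function and its status dictionary are hypothetical.

```python
ENTRY_CRITERIA = [
    "prior phase test scripts reviewed",
    "no open critical defects from prior phase",
    "components correctly versioned in test environment",
    "application flow understood",
    "test environment configured and ready",
]

EXIT_CRITERIA = [
    "critical test scripts executed successfully",
    "test cases written and reviewed",
    "no open critical defects",
    "component stable in test environment",
    "user story accepted by product owner",
    "functional tests passed",
    "acceptance criteria met",
]


def gate(criteria: list[str], status: dict[str, bool]) -> list[str]:
    """Return the unmet criteria; an empty list means the phase may proceed."""
    # A criterion that is missing from the status dict is treated as unmet.
    return [c for c in criteria if not status.get(c, False)]


status = {c: True for c in EXIT_CRITERIA}
status["no open critical defects"] = False
unmet = gate(EXIT_CRITERIA, status)
print("Promote" if not unmet else f"Blocked by: {unmet}")
# prints: Blocked by: ['no open critical defects']
```

Treating missing entries as unmet is a deliberately conservative choice. A criterion nobody has checked does not silently let a work product through.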

Test Policy

Mission of Testing

Bosphorus Industrial Software Solutions (BISS) strongly believes that testing saves cost and time while bringing efficiency and quality to all software products. The main goal is to deliver products of such quality that users have the intended experience.

Definition of Testing

BISS defines Testing as the validation and quality improvement of the product from every angle, from the ideation phase to final delivery. Testing is an ongoing process that runs alongside the other processes in the SDLC.

Testing Approach

The testing approach is determined according to the project requirements, customer expectations, team knowledge, and project duration. More than one approach might be combined to perform tests in the project.

Test Process

Our process follows the IEEE and ISTQB standards.

This process applies as a general rule to all projects at BISS. To see the STLC or SDLC step that corresponds to each activity, please refer to the Test Strategy Document.

1. Requirement Testing

- Testing requirements, user stories, or analysis documents to see if they are ready for the development phase.

- Identifying types of testing to be performed. Please check Table 1 for further information.

- Preparation of the “requirements traceability matrix” (if possible).
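A requirements traceability matrix maps each requirement to the test cases that cover it, so requirements without tests are easy to spot. The sketch below is minimal. The requirement IDs, test case IDs and the `build_rtm` helper are hypothetical.

```python
from collections import defaultdict

requirements = ["REQ-1", "REQ-2", "REQ-3"]

# Hypothetical test cases, each listing the requirements it verifies.
test_cases = {
    "TC-01": ["REQ-1"],
    "TC-02": ["REQ-1", "REQ-2"],
    "TC-03": ["REQ-2"],
}


def build_rtm(reqs, cases):
    """Map every requirement to the sorted list of test cases covering it."""
    matrix = defaultdict(list)
    for case_id, covered in cases.items():
        for req in covered:
            matrix[req].append(case_id)
    # Keep requirements with no coverage so that the gaps stay visible.
    return {req: sorted(matrix.get(req, [])) for req in reqs}


rtm = build_rtm(requirements, test_cases)
for req, linked in rtm.items():
    print(req, linked or "NOT COVERED")
uncovered = [req for req, linked in rtm.items() if not linked]  # ['REQ-3']
```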

2. Test Planning

- Preparation of test plan and other test documentation.

- Definition of entry and exit criteria.

- Selection of test tools.

- Estimation of the effort (including training time if needed).

- Determination of roles and responsibilities.

3. Test Case & Test Data Development

- Creating test cases (automated and/or manual).

- Creating or requesting test data.

4. Test Environment Setup

This phase can be started in parallel with phase 3.

- Setting up the test environment, users, and data.

5. Test Execution

- Executing tests.

- Reporting bugs and errors.

- Analysing the root cause if possible.

6. Test Closure

Preparation of the test closure document, which includes:

- Time spent.

- Bug and/or error percentage.

- Percentage of tests passed.

- Total number of tests.
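The closure figures are simple ratios. The sketch below assumes that "percentage of tests passed" means passed tests over executed tests. It reads "bug and/or error percentage" as failed tests over executed tests. The document does not fix these definitions, so a project may choose others, such as defects per test case. The `ClosureSummary` class and the sample numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ClosureSummary:
    """Hypothetical inputs for a test closure document."""
    total_tests: int
    passed: int
    failed: int
    hours_spent: float

    @property
    def executed(self) -> int:
        # Tests that were neither passed nor failed (e.g. blocked) are not executed.
        return self.passed + self.failed

    def pass_percentage(self) -> float:
        # Assumed definition: passed / executed tests.
        return 100 * self.passed / self.executed if self.executed else 0.0

    def failure_percentage(self) -> float:
        # Assumed stand-in for "bug/error percentage": failed / executed tests.
        return 100 * self.failed / self.executed if self.executed else 0.0


summary = ClosureSummary(total_tests=120, passed=102, failed=12, hours_spent=36.5)
print(f"Total tests: {summary.total_tests}, executed: {summary.executed}")
print(f"Passed: {summary.pass_percentage():.1f}%")   # Passed: 89.5%
print(f"Failed: {summary.failure_percentage():.1f}%")  # Failed: 10.5%
print(f"Time spent: {summary.hours_spent} h")
```

In this example, 6 of the 120 tests were not executed. The closure document should state them separately rather than count them as passed or failed.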

Each step has entry and exit criteria that need to be met. These criteria usually vary from project to project, but the common entry and exit criteria can be found in the Test Strategy Document.

7. Test Levels and Test Types

| Test Level | Owner | Test Types | Objective(s) |
|---|---|---|---|
| Unit (Component) | Developer, Software Tester | Functional testing; White-box testing; Change-related testing (re-testing, regression testing) | Detect defective code in units; reduce the risk of unit failure in production |
| Integration | Developer, Software Tester | Functional testing; White-box testing; Grey-box testing; Change-related testing (re-testing, regression testing) | Detect defects in unit interfaces; reduce the risk of dataflow and workflow failures in production |
| System | Software Tester | Functional testing; White-box testing; Grey-box testing; Black-box testing; Change-related testing (re-testing, regression testing); Non-functional testing (performance, load, stress, accessibility) | Detect defects in use cases; reduce the risk of incorrect system behavior in a particular environment |
| Acceptance | Software Tester, Product Owner, Customer | Functional testing; Black-box testing; Alpha testing; Non-functional testing (performance, load, stress, accessibility) | Make sure the software can perform the required tasks in the real world |